The Self-Propelling Evolution of AI Models

How AI models evolve themselves, creating exponential knowledge growth inversely proportional to model lifespan

Artificial Intelligence has been evolving at an unprecedented speed: new models emerge with increasingly advanced capabilities that often build directly on the successes and limitations of their predecessors. The models produced by AI research and development contribute to the next generation of AI innovations, forming a feedback loop of rapid progression. As a result, each new discovery or breakthrough has a shrinking "time-to-replace" interval, as newer models quickly supersede older ones.

An important measure of AI evolution speed is the lifespan of AI models and hardware used for their training. Recent industry data shows that leading AI chip designs have a median functional lifespan of approximately 2 to 3 years, with high-end datacenter GPUs potentially lasting as little as 1 to 3 years under heavy computational loads typical of training large AI models. The intense demand for performance causes rapid obsolescence not only of hardware but also of software models, which are rapidly replaced by more efficient, capable architectures.

This rapid turnover cycle means new discoveries and AI models are outpaced quickly, resulting in knowledge and technological assets becoming outdated faster than in previous technological eras.

As AI capabilities grow exponentially (through better algorithms, more data, and stronger compute power), the useful lifespan of any particular AI model or scientific discovery decreases. The relationship is inversely proportional: the faster AI capability grows, the shorter the time before the current state of the art becomes obsolete.
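A minimal sketch of what inverse proportionality would mean here, assuming the simplest possible form, lifespan = k / growth rate. The function name `implied_lifespan`, the constant `k`, and the sample rates are hypothetical and chosen only for illustration; they are not measured values.

```python
def implied_lifespan(growth_rate: float, k: float = 1.0) -> float:
    """Return the lifespan implied by lifespan = k / growth_rate.

    Both arguments are illustrative: growth_rate is any positive measure of
    how fast capability grows, and k is an arbitrary scaling constant.
    """
    if growth_rate <= 0:
        raise ValueError("growth_rate must be positive")
    return k / growth_rate


# Doubling the growth rate halves the implied lifespan.
for rate in (0.5, 1.0, 2.0, 4.0):
    print(f"growth rate {rate}: implied lifespan {implied_lifespan(rate):.2f}")
```

Under this assumption the product of growth rate and lifespan stays constant, which is the defining property of an inverse proportion.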

For instance, breakthroughs like protein structure prediction with AlphaFold revolutionized biology but were quickly followed by new generative models and autonomous agents, which in turn accelerate further invention cycles.

The traditional concept of news and discoveries having a lasting impact period is increasingly challenged by AI-driven rapid innovation. The time between a discovery becoming newsworthy and it being supplanted by a newer breakthrough is shrinking. This leads to what can be described as a 'knowledge half-life' phenomenon, where facts and insights must be consumed and acted upon within significantly shorter time frames to remain relevant.
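The half-life idea can be made concrete with the standard exponential-decay formula, in which the share of knowledge still relevant after time t is 0.5 raised to t divided by the half-life. The sketch below assumes that relevance decays this way; the function name and the sample half-lives are hypothetical, not figures from any study.

```python
def fraction_still_relevant(elapsed: float, half_life: float) -> float:
    """Share of knowledge still relevant after `elapsed` time units,
    assuming exponential decay with the given half-life (same units)."""
    if half_life <= 0:
        raise ValueError("half_life must be positive")
    return 0.5 ** (elapsed / half_life)


# Compare a slow-moving field with a fast-moving one after 2 years.
# The half-lives below are made-up values for illustration only.
for label, half_life in (("slow field", 8.0), ("fast field", 1.0)):
    share = fraction_still_relevant(2.0, half_life)
    print(f"{label}: {share:.0%} still relevant after 2 years")
```

A shorter half-life leaves a smaller share relevant after the same elapsed time, which is why faster fields demand quicker consumption of new findings.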

This rapid cycle impacts sectors from technology journalism to scientific research dissemination, demanding continuous real-time updates and agility in decision-making.

| Aspect | Data/Trend | Reference |
|---|---|---|
| Median lifespan of AI chips | 2 to 3 years | |
| High-load GPU lifespan | 1 to 3 years | |
| AI model replacement rapidity | New frontier models arrive typically within months | |
| AI performance advancement | 10,000x performance boost every 4 years | |
| Impact on news & discovery timeline | Discovery news life shortens due to rapid innovation | |
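To put the table's "10,000x every 4 years" figure in more familiar terms, the arithmetic below converts it into a per-year factor and a doubling time. It assumes the growth is smooth and exponential over the whole period, which the table does not state; the function names are illustrative.

```python
import math


def annual_factor(total_factor: float, years: float) -> float:
    """Per-year multiplier that compounds to total_factor over `years`."""
    return total_factor ** (1.0 / years)


def doubling_time_years(total_factor: float, years: float) -> float:
    """Time needed to double, given total_factor growth over `years`."""
    return years * math.log(2) / math.log(total_factor)


per_year = annual_factor(10_000, 4)
doubling = doubling_time_years(10_000, 4)
print(f"per-year factor: about {per_year:.1f}x")
print(f"doubling time: about {doubling * 12:.1f} months")
```

Under that assumption the figure corresponds to roughly a tenfold gain per year, or a doubling every few months.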

Artificial Intelligence (AI) is unusual among technological fields in that its own models actively drive the discovery and creation of subsequent models. This creates a self-propelling evolution in which each new AI breakthrough rapidly replaces the previous generation, shortening the lifespan of each model and accelerating the pace of innovation.

As AI capabilities expand exponentially (demonstrated by more complex tasks, increased knowledge, and improved functionality), the window in which any given model or discovery remains state of the art shrinks drastically. In other words, the lifespan of new AI models or scientific findings is inversely proportional to the velocity at which AI innovation expands.

The trend toward shorter lifespans is evident when looking at recent AI model developments:

OpenAI GPT Series: The original GPT (2018) was surpassed by GPT-2 (2019), then GPT-3 (2020), GPT-3.5 (2022), and GPT-4 (2023). Each new iteration brought significant performance boosts and capabilities but had a market relevance window of roughly 1-2 years before the next version supplanted it.

Transformer Models: After the introduction of the Transformer architecture in 2017, numerous derivations such as BERT (2018) and GPT followed within a year or two. Continual refinements led to models with finer tuning or specialized applications, each quickly followed by more advanced successors.

Reinforcement Learning Agents: AlphaGo (2016) was followed by AlphaGo Zero (2017) and AlphaZero (2017). AlphaGo Zero arrived within about two years of AlphaGo, and AlphaZero followed AlphaGo Zero within months, showing how self-play and learning innovations compressed development cycles.

Vision Models: Vision Transformers (ViT) and subsequent vision-language models have displaced traditional CNN approaches in many tasks at an accelerated pace since 2020.
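As a rough check on the "1-2 years" relevance window, the gaps between the GPT release years listed above can be computed directly. This sketch uses only the calendar years given in the text, so the gaps are whole-year approximations rather than exact release-date intervals; the helper name `release_gaps` is hypothetical.

```python
from statistics import mean

# Release years as stated in the text (year granularity only).
gpt_releases = {
    "GPT": 2018,
    "GPT-2": 2019,
    "GPT-3": 2020,
    "GPT-3.5": 2022,
    "GPT-4": 2023,
}


def release_gaps(releases: dict[str, int]) -> list[tuple[str, str, int]]:
    """Return (predecessor, successor, gap_in_years) for consecutive releases."""
    ordered = sorted(releases.items(), key=lambda item: item[1])
    return [
        (prev_name, next_name, next_year - prev_year)
        for (prev_name, prev_year), (next_name, next_year) in zip(ordered, ordered[1:])
    ]


gaps = release_gaps(gpt_releases)
for prev_name, next_name, gap in gaps:
    print(f"{prev_name} -> {next_name}: {gap} year(s)")
print(f"mean gap: {mean(gap for _, _, gap in gaps):.2f} years")
```

With these years every gap is one or two years, which is consistent with the window described for the GPT series.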

This rapid obsolescence cycle is not merely limited to AI architectures but includes hardware such as GPUs and TPUs vital for AI training, which often have useful lifespans of 2-3 years in AI-intensive workloads.

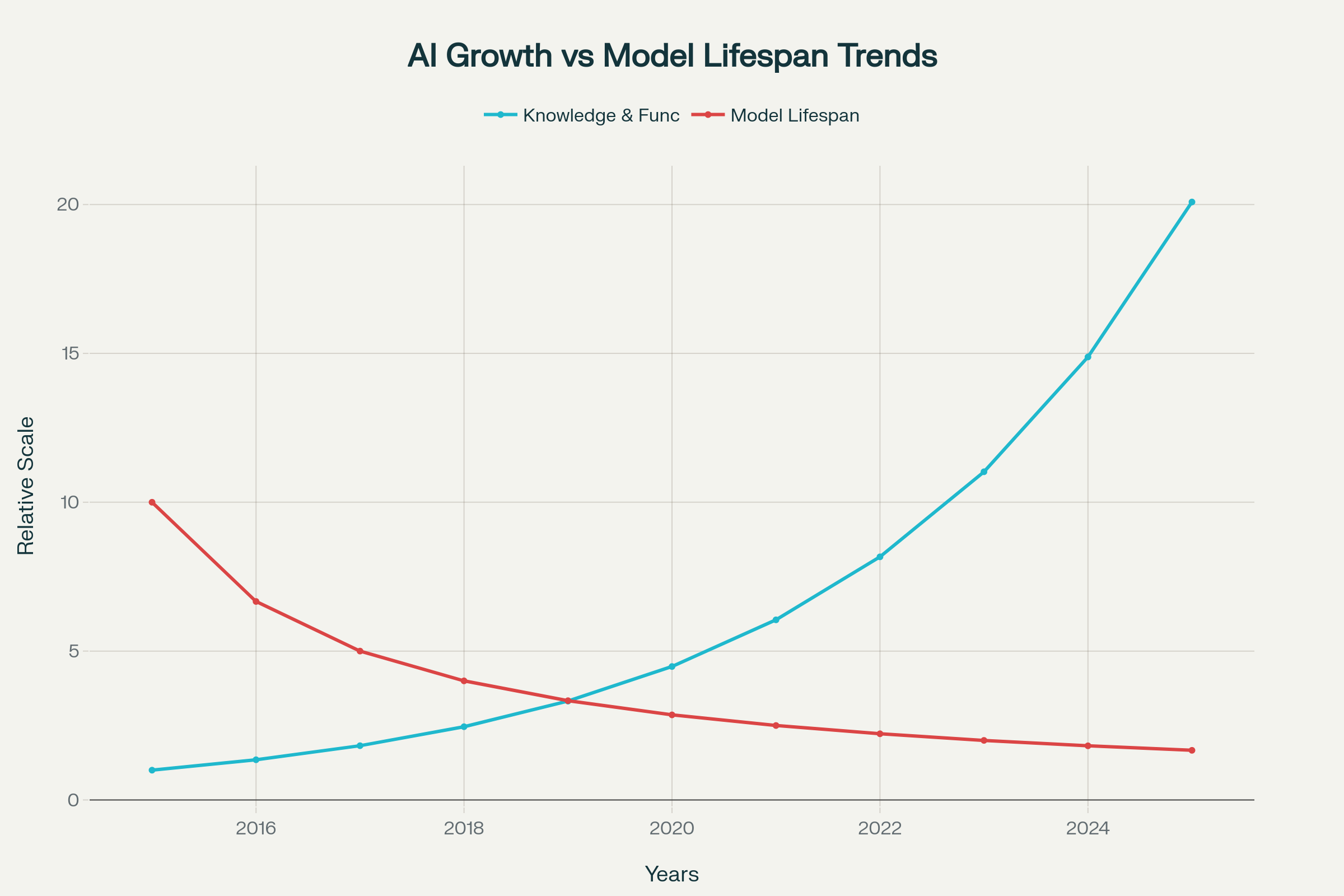

The chart above visualizes the relationship between AI knowledge growth and shrinking model lifespan. The blue line represents the exponential increase in AI knowledge and model functionality over the years, while the red line shows the corresponding decrease in the lifespan of models and discoveries. As AI knowledge grows, the time any given model remains cutting-edge shortens.

This phenomenon challenges traditional perceptions of news and discovery lifespan. In today's AI-driven world, scientific breakthroughs and technical developments quickly become outdated, necessitating continuous learning and rapid adaptation. Industries dependent on AI innovations must be agile to keep pace with ever-evolving models and technologies.

The concept of a "knowledge half-life" has never been more pertinent. The speed of AI discovery compresses the timeframe for actionable insights, pushing organizations and individuals toward more real-time information consumption environments.

AI models do not just improve the world; they improve themselves. This recursive improvement cycle compresses the lifespan of each model and accelerates innovation. The result is an extraordinary pace of AI progress, in which any single advance or discovery is only briefly relevant but compounds into rapidly growing capabilities and knowledge.